As long as intentionality remains an exclusively human trait that AI entirely lacks, the human factor will remain cybersecurity’s weakest link.

Artificial Intelligence is helping both attackers and their targets – shifting the nature of attack surfaces without weakening the intent of those who wish to profit from its crashes and failures.

Cyber-defense expert may well become the ultimate profession: the one in charge of continuously understanding and monitoring what is happening under the hood of AI.

Should we expect a digital world entirely administered by so-called “strong” Artificial Intelligence, capable of ensuring its own infrastructure’s security and reliable enough to fully ensure that of human users? That would be a true singularity moment, in which the human operator would have absolutely no interaction with the artificial brain – whether for its maintenance or its evolution.

Until we reach that horizon, which seems far away and would not necessarily be socially desirable, the human operator – user, administrator, or designer – will unfortunately remain capable of compromising (intentionally or not) the integrity and efficiency of the many AI processes in charge of our digital security.

Where are we now in 2018?

AI entered the realm of cybersecurity a few years ago. Machine Learning tools, for example, are used for threat identification by many service providers. But as soon as these techniques become available, they feed the cat-and-mouse game between attackers and their targets.

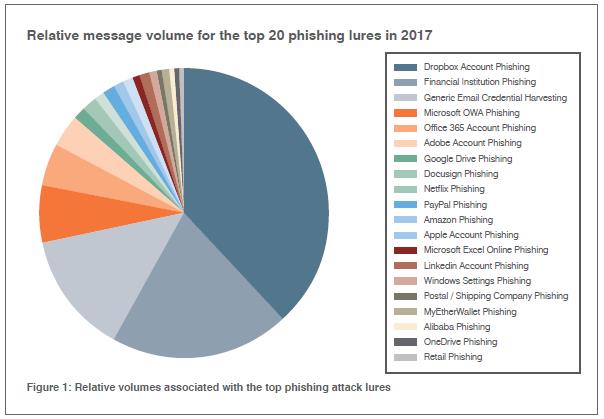

On the attackers’ side, the goal is – on the one hand – to compromise the data used to feed “Big Data” tools (Adversarial Machine Learning), and – on the other – to carry out AI-assisted social engineering, which multiplies the efficiency and volume of phishing attacks. Beyond emails, social networks, and instant messaging, every digital channel used by humans and their digital personal assistants is affected in turn: communications with chatbots, voice commands for connected objects, images, videos, and augmented reality.

At the heart of cyberthreats, the basic mechanisms of social engineering are clearly at play. They will remain so for some time, and will continue to involve the following tactics:

- Create a sense of urgency

- Imitate trusted brands or third parties

- Take advantage of our natural curiosity

- Exploit reflexive reactions to routine events such as software updates

The Human Factor 2018 – People-Centered Threats define the Landscape
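The four tactics listed above tend to leave textual traces that even a very simple filter can look for. The following is a minimal sketch in Python, not a description of any vendor’s product: the `CUES` table, its keyword patterns, and the `flag_message` function are all hypothetical names and values chosen for illustration. Real detection tools rely on far richer signals (sender reputation, URLs, learned models) than keyword matching.

```python
import re

# Hypothetical cue patterns, one group per tactic listed above.
# These keyword lists are illustrative assumptions, not a vetted ruleset.
CUES = {
    "urgency": [
        r"\burgent\b",
        r"\bimmediately\b",
        r"\baccount (?:will be )?suspended\b",
    ],
    "brand_imitation": [
        r"\bsecurity team\b",
        r"\bofficial notice\b",
        r"\bverify your account\b",
    ],
    "curiosity": [
        r"\bsee who\b",
        r"\bshared a document\b",
    ],
    "routine_event": [
        r"\bsoftware update\b",
        r"\bpassword expir",
        r"\binstall the latest\b",
    ],
}


def flag_message(text: str) -> dict:
    """Return a dict mapping each matched tactic to the phrases that triggered it."""
    hits = {}
    for tactic, patterns in CUES.items():
        matched = []
        for pattern in patterns:
            found = re.search(pattern, text, flags=re.IGNORECASE)
            if found:
                matched.append(found.group(0))
        if matched:
            hits[tactic] = matched
    return hits


if __name__ == "__main__":
    sample = (
        "URGENT: your account will be suspended. "
        "Install the latest software update immediately."
    )
    print(flag_message(sample))
```

A message that matches several tactic groups at once is a reasonable candidate for a closer look, but a keyword hit alone proves nothing: plenty of legitimate messages mention software updates, and attackers can easily rephrase.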

On the defenders’ side, the challenge is immense. The multiplication of new attack surfaces, which already include all connected objects and will in the future include autonomous vehicles, opens up a whole new field of risks that is now drawing everyone’s attention.

Here is an overview of the cybersecurity tools and processes that currently rely on AI algorithms:

- Against attacks on connected objects: Securing cyber-physical systems with AI processes embedded in chips.

- Against intrusion and malicious acts on digital and electrical infrastructures: Image identification, tagging, and classification using deep neural networks (deep learning).

- Against social engineering tactics used by attackers: Machine Learning embedded in smart personal assistants to combat threats directed at their owners by flagging malicious or phishing messages as unusual events.

- Against attacks through the phone: Conversational bots to filter out harassment and fraud attempts.

- Against new attacks on Cloud services: Cooperation between traditional “Cloud Access Security Broker” solutions and massive databases that keep track in real time of attack evidence and the components of the attack chains used (sources, targets and their communication profiles, vectors and vulnerabilities used, traces, links).

The Impact on Cyber-expertise profiles

In the end, can we find an unequivocal advantage to AI in the field of cybersecurity?

Yes, if we reason in terms of jobs and careers in the sector.

According to the latest “Cybersecurity Jobs Report”, there will be 3.5 million cybersecurity vacancies worldwide by 2021. In the meantime, the steady increase in the volume of attacks – and, inevitably, of false alerts (“false positives”) – will more than saturate the daily workload of the people in charge of our digital society’s security.

Emerging “cognitive cybersecurity” platforms that help cybersecurity analysts manage threats are therefore the main hope for the coming years and decades. For the time being, AI tools simplify the work of analysts by allowing them to spend less time researching and analyzing. But the analysts’ experience and precise domain expertise in the mechanisms of an attack and the vulnerabilities it exploits remain crucial, as does their understanding of the human aspects that drive the attacker’s intent and objectives.

As AI becomes more and more integrated into cybersecurity job processes, gains in time and efficiency will help make up for the shortage of human resources.

In the medium term, it is quite possible that cyber-expert will become the ultimate profession purely specialized in digital technologies – requiring a detailed mastery of the AI-powered machines and robots that will, in the end, be the ones dealing with data, networks, and machines.

Laura Peytavin

Laura Peytavin is a CISSP-certified pre-sales engineer with Proofpoint, based in Paris. She is co-president of the Telecom ParisTech alumni association and co-leads its Cyber Security group.

The opinions expressed by guest bloggers are their views and do not necessarily reflect the opinions of Corix Partners.